A Survey on Neural Network Interpretability

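1. Paper Information

Authors: Yu Zhang, Peter Tiňo, Aleš Leonardis, Ke Tang

Link: https://arxiv.org/abs/2012.14261

I was honestly lost when I read this survey, especially Sections III and IV, which go through the passive and active categories in detail and, within each, walk through the methods along the other two taxonomy dimensions. I did not follow much of it until I read a blog post by the first author, Yu Zhang, which gave me some insight: "A good survey should provide a 'coordinate system' for its research field, consisting of a set of orthogonal classification dimensions." [1]

Once every work has a coordinate, you can clearly see which directions are promising yet under-studied.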

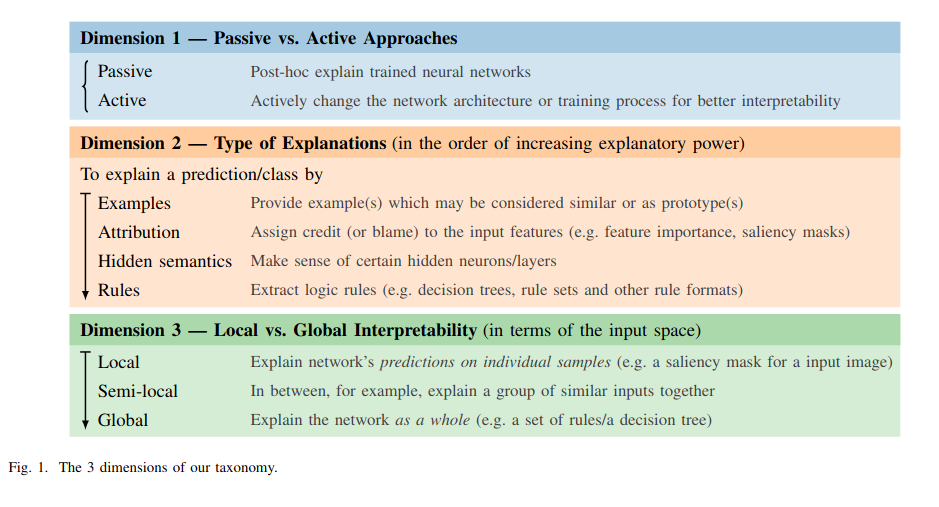

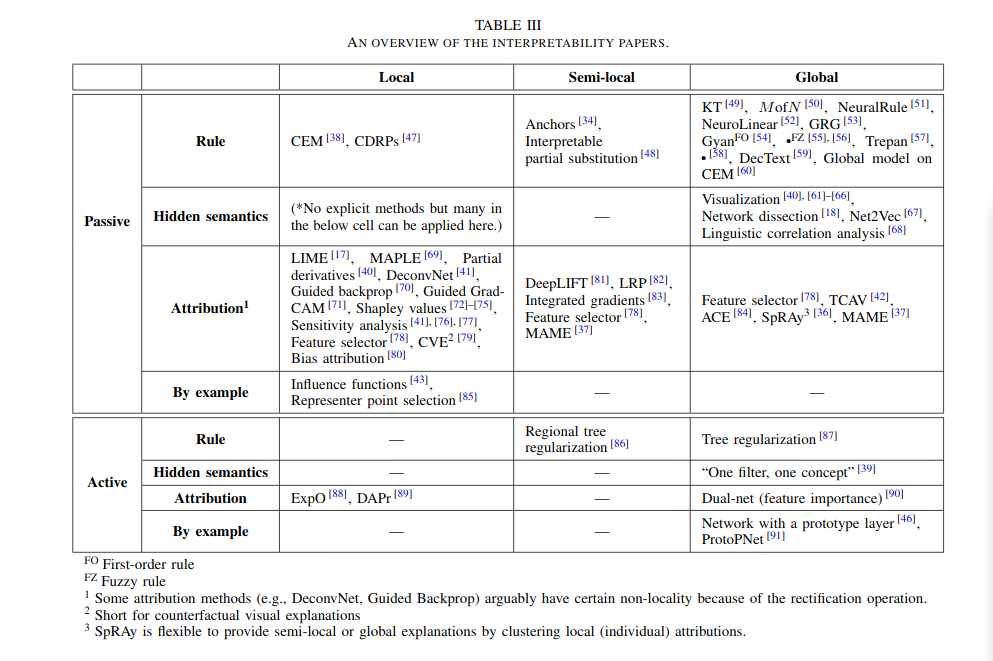

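Papers on neural network interpretability can be classified along three dimensions: post-hoc explanation vs. active intervention; the form of the explanation produced; and the scope the explanation covers. [2]

2. Abstract and Introduction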

Abstract

We first clarify the definition of interpretability as it has been used in many different contexts.

Then we elaborate on the importance of interpretability and propose a novel taxonomy organized along three dimensions: type of engagement (passive vs. active interpretation approaches), the type of explanation, and the focus (from local to global interpretability). This taxonomy provides a meaningful 3D view of distribution of papers from the relevant literature as two of the dimensions are not simply categorical but allow ordinal subcategories.

Finally, we summarize the existing interpretability evaluation methods and suggest possible research directions inspired by our new taxonomy.

INTRODUCTION

These observations suggest that even though DNNs can achieve superior performance on many tasks, their underlying mechanisms may be very different from those of humans’ and have not yet been well-understood.

An (Extended) Definition of Interpretability

Interpretability is the ability to provide explanations in understandable terms to a human.

We note that some studies distinguish between interpretability and explainability (or understandability, comprehensibility, transparency, human-simulatability, etc.). In this paper we do not emphasize the subtle differences among those terms.

**The Importance of Interpretability**

However, a clearly organized exposition of such argumentation is missing. We summarize the arguments for the importance of interpretability into three groups.

1) High Reliability Requirement:

Interpretability is not always needed but it is important for some prediction systems that are required to be highly reliable because an error may cause catastrophic results (e.g., human lives, heavy financial loss).

2) Ethical and Legal Requirement:

A first requirement is to avoid algorithmic discrimination.

Deep neural networks have also been used for new drug discovery and design.

Another legal requirement of interpretability is the “right to explanation”.

3) Scientific Usage:

Deep neural networks are becoming powerful tools in scientific research fields where the data may have complex intrinsic patterns.

Related Work and Contributions

There have already been attempts to summarize the techniques for neural network interpretability. However, most of them only provide basic categorization or enumeration, without a clear taxonomy.

This survey has the following contributions:

We make a further step towards the definition of interpretability on the basis of reference [15]. In this definition, we emphasize the type (or format) of explanations (e.g., rule forms, including both decision trees and decision rule sets).

We analyse the real needs for interpretability and summarize them into 3 groups: interpretability as an important component in systems that should be highly reliable, ethical or legal requirements, and interpretability providing tools to enhance knowledge in the relevant science fields.

We propose a new taxonomy comprising three dimensions (passive vs. active approaches, the format of explanations, and local-semilocal-global interpretability).

3. Taxonomy

The first dimension is categorical and has two possible values, passive interpretation and active interpretability intervention. It divides the existing approaches according to whether they require changing the network architecture or the optimization process.

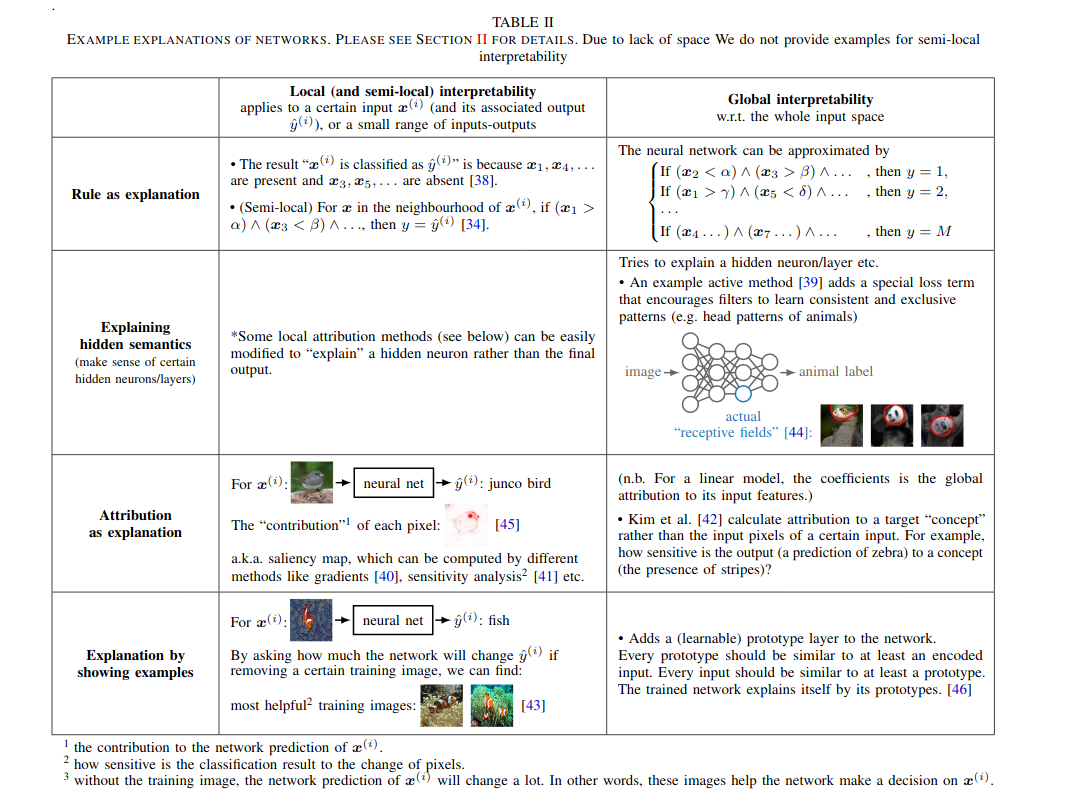

Our second dimension is type/format of explanation. By inspecting various kinds of explanations produced by different approaches, we can observe differences in how explicit they are. We recognize four major types of explanations here: logic rules, hidden semantics, attribution and explanations by examples, listed in order of decreasing explanatory power.

The last dimension, from local to global interpretability (w.r.t. the input space), has become very common in recent papers.
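To make the "coordinate system" idea concrete for myself, here is a minimal Python sketch of the three dimensions. The class names, the numeric ranks, and the placeholder methods `method_a` and `method_b` are my own assumptions for illustration; they do not come from the paper. The first dimension is a plain enum (categorical), while the other two are ordered enums (ordinal), and a small helper lists the cells that no method occupies yet.

```python
from dataclasses import dataclass
from enum import Enum, IntEnum
from itertools import product


class Engagement(Enum):
    # Categorical dimension: the two values have no ordering.
    PASSIVE = "passive"
    ACTIVE = "active"


class Explanation(IntEnum):
    # Ordinal dimension: a higher rank means higher explanatory power.
    EXAMPLES = 1
    ATTRIBUTION = 2
    HIDDEN_SEMANTICS = 3
    LOGIC_RULES = 4


class Focus(IntEnum):
    # Ordinal dimension with respect to the input space.
    LOCAL = 1
    SEMI_LOCAL = 2
    GLOBAL = 3


@dataclass(frozen=True)
class Coordinate:
    engagement: Engagement
    explanation: Explanation
    focus: Focus


def empty_cells(placed):
    """Return the taxonomy cells that none of the placed methods occupies."""
    all_cells = {
        Coordinate(e, x, f)
        for e, x, f in product(Engagement, Explanation, Focus)
    }
    return all_cells - set(placed.values())


# Hypothetical placements, for illustration only.
placed = {
    "method_a": Coordinate(Engagement.PASSIVE, Explanation.ATTRIBUTION, Focus.LOCAL),
    "method_b": Coordinate(Engagement.PASSIVE, Explanation.LOGIC_RULES, Focus.GLOBAL),
}
gaps = empty_cells(placed)
```

With 2 x 4 x 3 = 24 cells in total, whatever remains in `gaps` after placing the surveyed papers is a candidate for an under-studied direction.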

4. Evaluation of Interpretability

For logic rules and decision trees, the size of the extracted rule model is often used as a criterion.
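As a rough illustration of what "size" could mean here, the sketch below counts the rules and the total number of conditions in a small rule set. The rule representation and the two counts are my own assumptions; the excerpt above does not say which exact size measure is used.

```python
# Each rule is (list of conditions, predicted label); conditions are plain strings.
# The rule set is made up for illustration.
rules = [
    (["x1 > 0.5", "x2 <= 1.0"], "positive"),
    (["x3 > 2.0"], "negative"),
    ([], "positive"),  # default rule with no conditions
]


def rule_model_size(rules):
    """Return (number of rules, total number of conditions across all rules)."""
    n_rules = len(rules)
    n_conditions = sum(len(conditions) for conditions, _ in rules)
    return n_rules, n_conditions


size = rule_model_size(rules)
```

A smaller rule model is easier for a person to read in full, which is presumably why size works as a proxy criterion.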

Network Dissection [18] quantifies the interpretability of hidden units by calculating their matchiness to certain concepts.
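As far as I understand it, this "matchiness" is an intersection-over-union (IoU) score between the region where a unit fires strongly and the annotated region of a concept. Below is a minimal single-image sketch under that assumption. The toy activation map, the concept mask, and the fixed threshold of 0.5 are all made up; the actual method aggregates over a whole dataset and chooses its threshold differently.

```python
def threshold(activation, t):
    """Binarize an activation map: 1 where the value exceeds t, else 0."""
    return [[1 if a > t else 0 for a in row] for row in activation]


def iou(unit_mask, concept_mask):
    """Intersection over union of two same-shaped binary masks."""
    inter = 0
    union = 0
    for row_u, row_c in zip(unit_mask, concept_mask):
        for u, c in zip(row_u, row_c):
            inter += 1 if (u and c) else 0
            union += 1 if (u or c) else 0
    return inter / union if union else 0.0


# Toy 3x3 activation map of one hidden unit (hypothetical values).
activation = [
    [0.9, 0.8, 0.1],
    [0.7, 0.2, 0.0],
    [0.1, 0.0, 0.0],
]
# Toy pixel-level annotation of one concept (hypothetical).
concept_mask = [
    [1, 1, 0],
    [1, 1, 0],
    [0, 0, 0],
]

score = iou(threshold(activation, 0.5), concept_mask)
```

Here the unit fires on three of the four concept pixels and nowhere else, so the score is 3/4.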

As for the hidden unit visualization approaches, there is no good measurement yet.

For attribution approaches, their explanations are saliency maps/masks (or feature importance etc. according to the specific task).

5. Discussion

Passive (post-hoc) methods have been widely studied because they can be applied in a relatively straightforward manner to most existing networks.

Active (interpretability intervention) methods put forward ideas about how a network should be optimized to gain interpretability.
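To make the passive/active split concrete for myself: a passive method leaves training untouched, while an active method changes what is optimized. One simple way to picture the latter is a task loss plus a weighted interpretability term. In the sketch below the linear model, the L1 sparsity penalty, and the weight `lam` are my own illustrative assumptions, not a method taken from the survey.

```python
def task_loss(weights, data):
    """Mean squared error of a linear model on (features, target) pairs."""
    total = 0.0
    for features, target in data:
        pred = sum(w * x for w, x in zip(weights, features))
        total += (pred - target) ** 2
    return total / len(data)


def sparsity_penalty(weights):
    """L1 norm: fewer non-zero weights means fewer features to explain."""
    return sum(abs(w) for w in weights)


def active_objective(weights, data, lam=0.1):
    """Task loss plus a weighted interpretability term (assumed form)."""
    return task_loss(weights, data) + lam * sparsity_penalty(weights)


# Toy data and weights, for illustration only.
data = [([1.0, 2.0], 1.0), ([2.0, 0.0], 2.0)]
sparse_weights = [1.0, 0.0]

objective = active_objective(sparse_weights, data, lam=0.5)
```

A passive method would only ever evaluate `task_loss` during training and explain the resulting weights afterwards; the active variant trades some accuracy for a model that is easier to explain.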

As for the second dimension, the format of explanations, logical rules are the clearest (they do not need further human interpretation).

Hidden semantics essentially explain a subpart of a network, with most work developed in the computer vision field.

Attribution is very suitable for explaining individual inputs.
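A small sketch of why attribution fits single inputs: occlusion-based attribution scores each feature of one input by how much the output changes when that feature is replaced with a baseline. I picked occlusion only because it is easy to write down; the toy linear `toy_model`, its weights, and the zero baseline are assumptions for illustration.

```python
def occlusion_attribution(model, x, baseline=0.0):
    """Score each feature by the output drop when it is set to `baseline`."""
    full = model(x)
    scores = []
    for i in range(len(x)):
        occluded = list(x)
        occluded[i] = baseline
        scores.append(full - model(occluded))
    return scores


def toy_model(x):
    # Hypothetical stand-in for a trained network: a fixed linear scorer.
    weights = [2.0, -1.0, 0.5]
    return sum(w * v for w, v in zip(weights, x))


scores = occlusion_attribution(toy_model, [1.0, 3.0, 4.0])
```

The scores describe this one input only. A different input would get different scores, which is what makes the explanation local.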

Explaining by providing an example has the lowest (the most implicit) explanatory power.

As for the last dimension, local explanations are more useful when we care more about every single prediction (e.g., a credit or insurance risk assessment).

For some research fields, such as genomics and astronomy, global explanations are preferred as they may reveal some general “knowledge”.

6. Conclusion

First, we have discussed the definition of interpretability and stressed the importance of the format of explanations and domain knowledge/representations.

Then, by reviewing the previous literature, we summarized 3 essential reasons why interpretability is important: the requirement of high reliability systems, ethical/legal requirements and knowledge finding for science.

After that, we introduced a novel taxonomy for the existing network interpretation methods.

From the perspective of the new taxonomy, there are still several possible research directions in the interpretability research:

First, the active interpretability intervention approaches are underexplored.

Another important research direction may be how to better incorporate domain knowledge in the networks.

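References:

- [1] 神经网络可解释性综述 (A Survey on Neural Network Interpretability)

- [2] 【综述专栏】神经网络可解释性综述 ([Survey Column] A Survey on Neural Network Interpretability)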