Measuring Mathematical Problem Solving With the MATH Dataset

1.论文信息

作者: Dan Hendrycks,Collin Burns,Saurav Kadavath,Akul Arora,Steven Basart,Eric Tang,Dawn Song,Jacob Steinhardt

机构: UC Berkeley,UChicago

链接: https://arxiv.org/abs/2103.03874

代码&数据集: https://github.com/hendrycks/math/tree/main/data_file_lists

对我而言,本文提出的数据集有三个亮点:

1)数据集中的分步解决方案能让语言模型像人类数学家一样使用“短期记忆”,即模型不必马上得出正确答案,而是可以逐步探索解法一步步走向正确答案。1

2)模型的大力出奇迹在本文数据集失效了(One area where just scaling things up doesn’t help)2,即使强如大规模的Transformer模型也无法大展身手,在数学问题求解上我们还有很长的路要走。

3)使用 LATEX 和 Asymptote 矢量图语言将数学问题及其解进行统一格式化3

2.论文摘要与简介

为了量化机器学习模型中的数学问题解决能力,本文提出了由12,500道有挑战性的竞赛数学题组成的新数据集MATH.MATH覆盖了高级解决问题技巧,但模型也许首先需要的是在基础数学中完整地被训练过,于是本文作者创建了第一个由数十万用自然语言和latex写的按部就班解决方法(step-by-step solutions)组成的大型数学预训练数据集AMPS(Auxiliary Mathematics Problems and Solution),其来源于Khan Academy 和Mathematica,大小为23GB.

3.相关工作

1)Neural Theorem Provers

Rather than prove theorems with standard pretrained Transformers, McAllester (2020) proposes that the community create theorem provers that bootstrap their mathematical capabilities through open-ended self-improvement. For bootstrapping to be feasible, models will also need to understand mathematics as humans write it, as manually converting advanced mathematics to a proof generation language is extremely time-consuming. This is why Szegedy(2020) argues that working on formal theorem provers alone will be an impractical path towards world-class mathematical reasoning. We address Szegedy (2020)’s concern by creating a dataset to test understanding of mathematics written in natural language and commonplace mathematical notation. This also means that the answers in our dataset can be assessed without the need for a cumbersome theorem proving environment, which is another advantage of our evaluation framework.

2)Neural Calculators

Lample and Charton (2020) use Transformers to solve algorithmically generated symbolic integration problems and achieve greater than 95% accuracy. Amini et al.(2019); Ling et al. (2017) introduce plug-and-chug multiple choice mathematics problems and focus on sequence-to-program generation. Saxton et al. (2019) introduce the DeepMind Mathematics dataset, which consists of algorithmically generated plug-and-chug problems such as addition, list sorting, and function evaluation.Recently, Henighan et al. (2020) show that nearly all of the DeepMind Mathematics dataset can be straightforwardly solved with large Transformers.

3)Benchmarks for Enormous Transformers

unlike our benchmark, which is a text generation task with 12,500 mathematical reasoning questions, their benchmark is a multiple choice task that includes only a few hundred questions about mathematics. We find that our MATH benchmark is especially challenging for current models and, if trends continue, simply using bigger versions of today’s Transformers will not solve our task in the foreseeable future.

4.数据集

1)The MATH Dataset

The MATH dataset consists of problems from mathematics competitions including the AMC 10, AMC 12, AIME, and more. The Mathematics Aptitude Test of Heuristics dataset, abbreviated MATH, has 12,500 problems (7,500 training and 5,000 test).

2)AMPS (Khan + Mathematica) Dataset

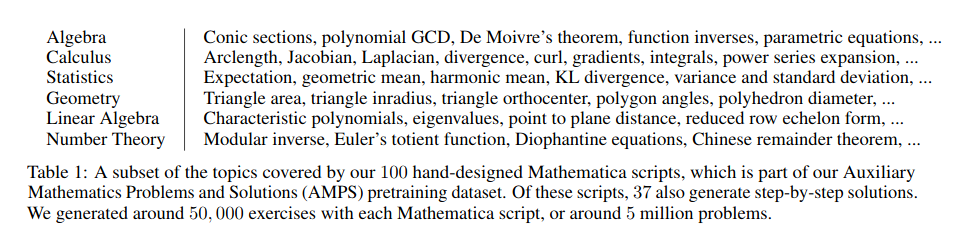

Our pretraining dataset, the Auxiliary Mathematics Problems and Solutions (AMPS) dataset, has problems and step-by-step solutions typeset in LATEX. AMPS contains over 100,000 problems pulled from Khan Academy and approximately 5 million problems generated from manually designed Mathematica scripts.

5.实验结果

We find that accuracy remains low even for the best models. Furthermore, unlike for most other text-based datasets, we find that accuracy is increasingly very slowly with model size. If trends continue, then we will need algorithmic improvements, rather than just scale, to make substantial progress on MATH. Nevertheless, we show that making progress is also possible today. We find that pretraining on AMPS increases relative accuracy by 25%, which is comparable to the improvement due to a 15× increase in model size.

We find that having models generate their own step-by-step solutions before producing an answer actually degrades accuracy. We find that providing partial ground truth step-by-step solutions can improve performance, and that providing models with step-by-step solutions at training time also increases accuracy.

We hypothesize that the drop in accuracy from using scratch space arises from a snowballing effect, in which partially generated “solutions” with mistakes can derail subsequent generated text.Nevertheless, when generation becomes more reliable and models no longer confuse themselves by their own generations,our dataset’s solutions could in principle teach models to use scratch space and attain higher accuracy.

We find that giving models partial step-by-step MATH solutions during inference can improve accuracy. We test performance when we allow models to predict the final answer given a “hint” in theform of a portion of the ground truth step-by-step solution.

Observe that the model still only attains approximately 40% when given 99% of the solution,indicating room for improvement.(敲黑板:提升模型间的有用写法)

6.结论

We introduced the MATH benchmark, which enables the community to measure mathematical problem-solving ability.In addition to having answers, all MATH problems also include answer explanations, which models can learn from to generate their own step-by-step solutions. We also introduce AMPS, a diverse pretraining corpus that can enable future models to learn virtually all of K-12 mathematics. While most other text-based tasks are already nearly solved by enormous Transformers, MATH is notably different. We showed that accuracy is slowly increasing and, if trends continue, the community will need to discover conceptual and algorithmic breakthroughs to attain strong performance on MATH. Given the broad reach and applicability of mathematics, solving the MATH dataset with machine learning would be of profound practical and intellectual significance.